About me

I’m a second-year PhD student in the Department of Computer Science and Technology at the University of Cambridge, advised by Prof. Rafal Mantiuk. My current research interests lie at the intersection of computer graphics and machine learning. My research background includes content-adaptive rendering and real-time 3D reconstruction.

Previously, I obtained a B.Sc. in pure mathematics from the University of Toronto and an M.Sc. in computer engineering from McGill University, where I was fortunate to be supervised by Prof. Derek Nowrouzezahrai and Prof. Morgan McGuire.

Beyond research, I enjoy reading, fashion, and the arts. I’m particularly interested in psychoanalysis as well as both Eastern and Western philosophy.

Feel free to reach out via email if you’re interested in research collaboration ❤️

News

[June, 2025] I’m glad to have been selected as a finalist for the Qualcomm Innovation Fellowship Europe 2025!

[March, 2025] I’m honored to receive the Rabin Ezra Scholarship Trust 2025 Award!

Research

NeuMaDiff: Neural Material Synthesis via Hyperdiffusion

Chenliang Zhou, Zheyuan Hu, Alejandro Sztrajman, Yancheng Cai, Yaru Liu, Cengiz Oztireli, Submitted to ICCV 2025

NeuMaDiff is a neural material synthesis framework based on hyperdiffusion. It uses neural fields as a low-dimensional material representation and a multi-modal conditional hyperdiffusion model to learn the distribution over the weights of these neural materials. This allows synthesis to be guided by inputs such as material type, text descriptions, or reference images, giving users finer control over the generated materials.

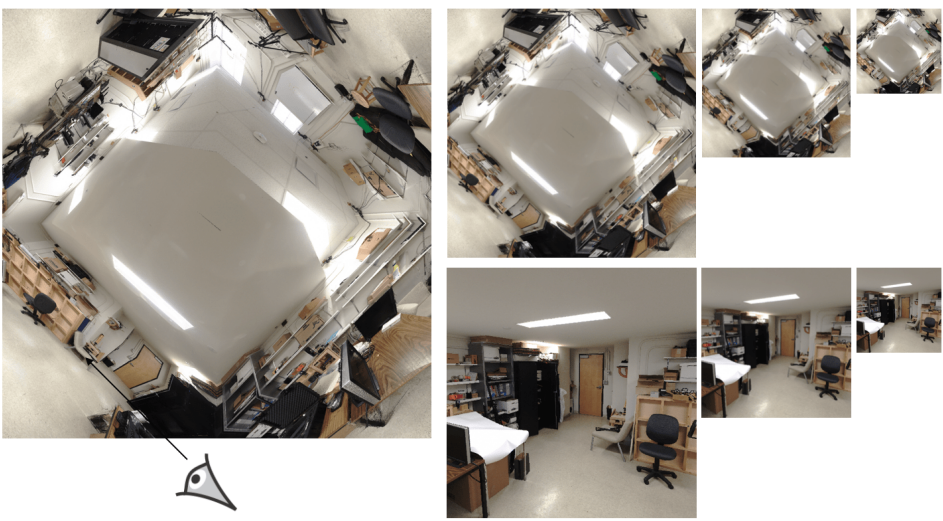

Real-Time Scene Reconstruction using Light Field Probes

Yaru Liu, Derek Nowrouzezahrai, Morgan McGuire, I3D 2024, Poster

This work explores novel view synthesis methods that reconstruct complex scenes without relying on explicit geometry. Our approach uses sparse real-world images to build multi-scale implicit representations of scene geometry. Its key component is a probe data structure that captures highly accurate depth information from dense data points. This lets us reconstruct detailed scenes at a lower computational cost and makes rendering performance independent of scene complexity. In addition, probe data is more efficient to compress and stream than explicit scene geometry, which makes the method well suited to large-scale rendering applications.

Talks

Streaming of rendered content with adaptive frame rate and resolution, University of Cambridge, May 2025